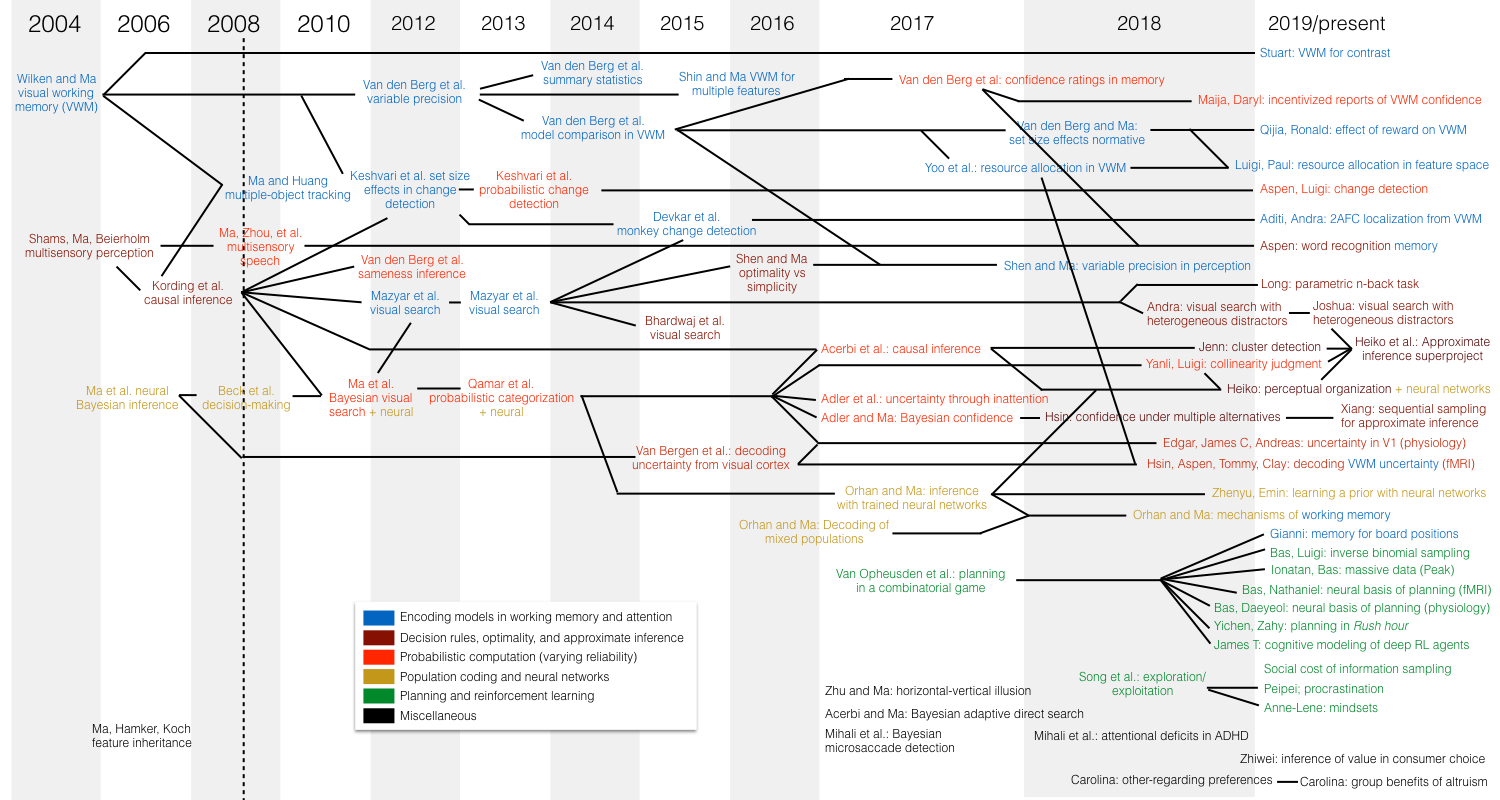

Origin tree of projects in the Ma Lab. Each project is part of a lineage of projects. This diagram shows how projects followed from each other, color-coded by topic area. Created on Apr 27, 2019 by Wei Ji Ma.

We aim to understand the strategies and algorithms of human decision-making using behavioral experiments, mathematical modeling, and neural networks. We are also interested in the neural basis of decision-making. Some of our work has more to do with encoding and representation than with decision-making.

The lab's current focus areas are

- Sequential decision-making and planning

- Social decision-making

- Approximate inference and resource rationality

We have also worked extensively on visual and multisensory perception, working memory, visual attention, theories of neural coding and perceptual computation, experimental tests of these theories, exploration/exploitation, and social networks, but those are currently less active.

Our neural modeling work falls into a tradition that takes behavioral data and the problems faced by the organism as starting points to understand neural processes; for this, we use the language of probability theory and machine learning. Our modeling usually starts with principles of optimality or rationality, but often ends up having suboptimal twists. This contrasts with another tradition, rooted in physics, in which neural measurements and in particular temporal dynamics are central, and theories are formulated in terms of differential equations and dynamical systems.

Sequential decision-making and planning

Planning can be defined as the mental simulation of futures, as in navigation, career planning, programming, or national policy. Cognitive science has mostly studied planning in relatively simple sequential decision tasks, where simplicity could for example be measured by the size of the state space. Many real-world decision tasks are more complex. In various projects, we are exploring the way people plan (mentally simulate future states) in relatively complex tasks, where exhaustive calculation is intractable for people, but we can still have experimental control and we can fit and compare models.

- Combinatorial planning in a two-player game (Bas van Opheusden, Zahy Bnaya, Gianni Galbiati, Yunqi Li). Psychologists have long been interested in chess, because it might teach us about the nature of expertise and about the types of heuristics that people use when the decision tree is large. However, chess is difficult to model quantitatively. Instead, we use a simpler game, akin to tic-tac-toe. For this game, we are developing an AI-inspired quantitative model that can simultaneously predict people's choices, position evaluations, reaction times, and eye movements.

- Play the game and explore the data

- Van Opheusden B, Galbiati G, Bnaya Z, Li Y, Ma WJ (2017), A computational model for decision tree search. Proceedings of the 39th Annual Meeting of the Cognitive Science Society, 1254-1259. PDF

- Van Opheusden B, Galbiati G, Bnaya Z, Ma WJ (2016), Do people think like computers? Computers and Games 2016, Leiden, The Netherlands, Series Volume 10068. Eds. Plaat A, Kosters W, Van den Herik J, 212-224. PDF

- Combinatorial planning in a two-player game - big data (Ionatan Kuperwajs and Bas van Opheusden). We have partnered with Peak Brain Training to put four-in-a-row on their mobile game platform. We now have tens of millions of games that we can use to ask questions about learning and strategy.

- Combinatorial planning in a one-player game (Yichen Li and Zahy Bnaya). We use Rush Hour to study how people plan in a deterministic game with a large decision tree.

- Procrastination (Peipei Zhang). We build reinforcement learning models of procrastination.

- Information sampling in a trust game. See "Social decision-making" below.

- Collaborative planning. When planning collaboratively with a partner to solve a task, an agent needs to build beliefs over the background knowledge, current knowledge, policy, and goals of the partner. We currently have three projects in which collaboration is crucial.

Social decision-making

- Characterizing generosity (Carolina di Tella and Paul Glimcher). If you can choose between $40 for yourself and $20 for me, or $30 for yourself and $50 for me, which would you choose? Economists have come up with different utility functions of how people weigh what they get themselves and what others get. We test these models using maximally informative experimental trials, both when the other is a friend and when the other is a stranger.

- Is altruism intuitive? (Carolina di Tella). We ask whether having a heavy cognitive load makes people more or less altruistic.

- Altruism and group selection (Carolina di Tella). We ask whether altruism has benefits when groups compete.

- Information sampling in a trust game. To determine whether someone can be trusted, you need to gather information about that person. However, if the other feels that you are gathering too much information about them, this might itself hamper trust building. This project investigates how these two social costs trade off against each other.

- Effects of race on emotion judgment (Jenn Laura Lee and Jon Freeman). In judging whether a face is happy or angry, racial biases might play a role. Using parametrically varying emotional face stimuli, we have collected behavioral data on how people judge emotion on a fine scale. We are now modeling these data using a drift-diffusion model.
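As a rough illustration of the drift-diffusion model mentioned in the last project above, the following sketch simulates single trials in which noisy evidence accumulates until it reaches one of two bounds. It is a generic textbook version, not the lab's fitted model: the function name `simulate_ddm`, the parameter values, and the reading of the upper bound as a "happy" response are all assumptions made for the example.

```python
import random


def simulate_ddm(drift, threshold=1.0, noise_sd=1.0, dt=0.001,
                 max_time=5.0, rng=None):
    """Simulate one drift-diffusion trial.

    Returns (choice, reaction_time), where choice is +1 if the upper
    bound was reached, -1 for the lower bound, and 0 if neither bound
    was reached before max_time. All parameter values are hypothetical.
    """
    rng = rng or random.Random()
    evidence, t = 0.0, 0.0
    step_sd = noise_sd * dt ** 0.5  # noise scales with sqrt(dt)
    while t < max_time:
        evidence += drift * dt + rng.gauss(0.0, step_sd)
        t += dt
        if evidence >= threshold:
            return 1, t
        if evidence <= -threshold:
            return -1, t
    return 0, t


if __name__ == "__main__":
    rng = random.Random(0)
    for drift in (0.0, 0.5, 1.0):  # stronger stimulus -> larger drift
        trials = [simulate_ddm(drift, rng=rng) for _ in range(200)]
        p_upper = sum(choice == 1 for choice, _ in trials) / len(trials)
        mean_rt = sum(rt for _, rt in trials) / len(trials)
        print(f"drift={drift:.1f}  P(upper)={p_upper:.2f}  mean RT={mean_rt:.2f}s")
```

In a model of this kind, a bias could in principle show up as a shifted starting point or a changed drift rate; which parameters are affected in the actual data is an empirical question that this sketch does not address.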

Approximate inference and resource rationality

While we have extensive experience in the lab comparing Bayesian/optimal to alternative models (e.g. Shen and Ma 2016), those alternatives are often not very satisfactory, because they are often specific to the task. We are interested in more general approximate frameworks.

- Resource-rational attention and working memory (Ronald van den Berg, Aspen Yoo). In one recent paper (Van den Berg and Ma, 2018), we conceptualized set size effects in working memory as resulting from an optimal trade-off between performance and cost. This is an example of a resource-rational theory. In a related paper (Yoo et al., 2018), we examined how attention is near-optimally allocated to items with different behavioral relevance.

- Effect of reward on working memory (Ronald van den Berg and Qijia Zou). In a follow-up study, we ask whether paying people more can improve their working memory.

- Partially committal observers (Jenn Laura Lee). In a spatial cluster detection task, we ask whether the brain, when doing a categorization task with nuisance parameters, commits to specific values (point estimates) of those nuisance parameters, or maintains full probability distributions over them.

- Approximate inference superproject (Heiko Schütt and others). Across five different data sets, we ask whether the brain uses partially committal algorithms when making category decisions.

- Approximate inference through sequential sampling (Xiang Li and Luigi Acerbi). We ask if the brain is doing something like sequential Bayesian optimization in multi-alternative decision-making.

Visual and multisensory perception

Most of our work on visual and multisensory perception focuses on questions of inference and probabilistic representation. In Bayesian inference, an observer builds beliefs about world states based on observations and assumptions about the statistical structure of the world. If the assumptions are correct, then the Bayesian observer achieves optimal performance. When necessary, Bayesian observers integrate pieces of information in a way that takes into account the uncertainty of these pieces. We call this probabilistic computation. There is evidence from simple perceptual tasks that humans and monkeys perform probabilistic computation and are sometimes close to optimal. Relatively little work has been done in more complex perceptual decisions, such as extracting visual structure or categories from simple features. We are interested both in optimality and in probabilistic computation in such tasks, in particular ones in which the observer needs to integrate information from multiple items into a global, categorical judgment. We are also interested in confidence ratings.
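To make "integrating pieces of information in a way that takes into account their uncertainty" concrete, here is a minimal sketch of the standard reliability-weighted combination of two independent Gaussian measurements under a flat prior. The function name `combine_cues` and the example numbers are illustrative assumptions, not taken from any specific experiment.

```python
def combine_cues(x1, sigma1, x2, sigma2):
    """Combine two independent Gaussian measurements of the same quantity.

    Each measurement is weighted by its reliability (inverse variance).
    Assumes a flat prior; returns the posterior mean and standard deviation.
    """
    w1 = 1.0 / sigma1 ** 2
    w2 = 1.0 / sigma2 ** 2
    posterior_mean = (w1 * x1 + w2 * x2) / (w1 + w2)
    posterior_sd = (1.0 / (w1 + w2)) ** 0.5
    return posterior_mean, posterior_sd


if __name__ == "__main__":
    # A reliable cue at 10 (sd 1) and a less reliable cue at 14 (sd 2).
    mean, sd = combine_cues(10.0, 1.0, 14.0, 2.0)
    print(f"combined estimate: {mean:.2f} +/- {sd:.3f}")
```

The combined estimate lies closer to the more reliable cue, and its standard deviation is smaller than that of either cue alone. An observer who ignored uncertainty (for example, by simple averaging) would give up this gain.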

Current projects:

- Are confidence ratings based on posterior probabilities? Hsin-Hung Li

- Does uncertainty get taken into account in collinearity detection? Yanli Zhou, Luigi Acerbi

- How does the brain resolve visual ambiguity in the aperture problem? Edgar Walker

- Causal inference in auditory-visual perception. Luigi Acerbi and Trevor Holland

- How does the brain detect and discriminate clusters? Jenn Laura Lee

We study a categorization task in which taking sensory uncertainty into account would help categorization performance, and ask whether people indeed do so.

Causal inference in multisensory perception | PLoS ONE 2007 | Frontiers in Psychology 2013 | PLoS Computational Biology 2018

When you hear a sound and see something happening at the same time, these two stimuli might or might not have anything to do with each other. The brain must figure out which stimuli belong together and which don't.
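The problem of deciding whether two stimuli belong together can be sketched as Bayesian model comparison between a common cause and two independent causes. The code below uses one common Gaussian formulation (Gaussian measurement noise and a Gaussian prior over source location); whether it matches the cited papers in every detail is not stated here, and the function name and all parameter values are assumptions chosen for illustration.

```python
import math


def posterior_common_cause(x_v, x_a, sigma_v, sigma_a, sigma_p,
                           p_common=0.5, mu_p=0.0):
    """Posterior probability that a visual measurement x_v and an auditory
    measurement x_a arose from a single source.

    Assumes Gaussian noise (sigma_v, sigma_a) and a Gaussian prior over
    source location with mean mu_p and standard deviation sigma_p.
    """
    var_v, var_a, var_p = sigma_v ** 2, sigma_a ** 2, sigma_p ** 2

    # Likelihood of both measurements under one shared source (C = 1).
    denom = var_v * var_a + var_v * var_p + var_a * var_p
    quad = ((x_v - x_a) ** 2 * var_p
            + (x_v - mu_p) ** 2 * var_a
            + (x_a - mu_p) ** 2 * var_v)
    like_common = math.exp(-0.5 * quad / denom) / (2 * math.pi * math.sqrt(denom))

    # Likelihood under two independent sources (C = 2).
    quad_v = (x_v - mu_p) ** 2 / (var_v + var_p)
    quad_a = (x_a - mu_p) ** 2 / (var_a + var_p)
    like_separate = (math.exp(-0.5 * (quad_v + quad_a))
                     / (2 * math.pi * math.sqrt((var_v + var_p) * (var_a + var_p))))

    numerator = like_common * p_common
    return numerator / (numerator + like_separate * (1 - p_common))


if __name__ == "__main__":
    for x_a in (1.0, 5.0, 15.0):  # auditory measurement moves away from x_v = 0
        p = posterior_common_cause(0.0, x_a, sigma_v=1.0, sigma_a=3.0, sigma_p=10.0)
        print(f"x_a={x_a:5.1f}  P(common cause)={p:.3f}")
```

As the two measurements move apart, the posterior probability of a common cause falls, so an observer of this kind integrates nearby stimuli and segregates discrepant ones.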

Sameness judgment | PNAS 2012

Judging whether a set of stimuli are the same or different is an important cognitive function and might underlie more abstract notions of similarity and equivalence.

Confidence ratings | Psychological Review 2017 | Neural Computation 2018

We ask whether confidence ratings are based on posterior probabilities, and if so, how criteria are placed.

Visual search. See "Visual attention" below.

Change detection. See "Working memory" below.

Neural implementation. See "Neural coding and neural computation" below.

Working memory

Traditional models of working memory assert that items are remembered in an all-or-none fashion. We have shown that more important than quantity limitations are quality limitations: the memory of each item is noisy and the more items have to be remembered, the higher the noise level. This type of model is also called a "resource model". In another line of work, we try to take the decision stage of working memory tasks seriously; for example, to understand change detection, one has to understand the process of comparing the remembered stimuli with the current stimuli. Along these lines, we are currently examining whether working memory contains a useful representation of uncertainty. Finally, we recently wrote about what determines whether a neural network maintains a memory using persistent activity versus sequential activity.

Current projects:

- Incentivized reports of working memory confidence (Maija Honig and Daryl Fougnie)

- How is luminance contrast remembered? (Stuart Jackson)

- What is the source of interference in the n-back task? (Long Ni)

- Bayesian models of word recognition memory (Aspen Yoo)

- How is visual working memory uncertainty encoded in cortical activity? (Aspen Yoo, Tommy Sprague, and Clay Curtis)

- Memory of patterned stimuli, and the effects of feature expertise (Gianni Galbiati)

- Delayed localization: a new working memory paradigm (Aditi Singh and Andra Mihali)

Review of the resource view of working memory | Nature Neuroscience 2014

Neural basis of working memory | Nature Neuroscience 2019

Variable precision | PNAS 2012 | Psychological Review 2014

These papers develop the theory that the precision of encoding an item in working memory is variable from trial to trial and from item to item.

Resource-rational theory of set size effects | eLife 2018

We propose a conceptually new way of thinking about resource limitations: not as a strict limitation, but as the result of a rational trade-off between performance and neural cost. The law of diminishing returns makes an appearance.
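The trade-off idea can be illustrated with a toy calculation. In the sketch below, an observer chooses the per-item precision that minimizes the sum of an expected behavioral loss and a neural cost that grows with the number of items. The functional forms (an exponentially decreasing loss and a linear cost), the cost weight, and the function name `optimal_precision` are hypothetical choices for illustration only; they are not the forms used in the paper.

```python
import math


def optimal_precision(set_size, cost_per_unit=0.01,
                      grid_max=10.0, grid_steps=10000):
    """Find the per-item precision that minimizes total expected cost.

    Hypothetical assumptions: behavioral loss falls as exp(-precision),
    which gives diminishing returns, and neural cost is proportional to
    precision summed over all items.
    """
    def total_cost(precision):
        behavioral_loss = math.exp(-precision)
        neural_cost = cost_per_unit * set_size * precision
        return behavioral_loss + neural_cost

    # Simple grid search over candidate precision values.
    grid = [grid_max * i / grid_steps for i in range(grid_steps + 1)]
    return min(grid, key=total_cost)


if __name__ == "__main__":
    for n in (1, 2, 4, 8):
        print(f"set size {n}: optimal per-item precision = {optimal_precision(n):.3f}")
```

Under these assumptions the optimal per-item precision decreases as set size grows, so a set size effect emerges from the trade-off itself, without imposing a fixed resource limit.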

Delayed estimation | Journal of Vision 2004 | PNAS 2012 | Psychological Review 2014

This is a task we introduced in working memory studies. Observers estimate the value of a remembered stimulus feature on a continuum. Models of set size effects are frequently tested using delayed estimation.

Change detection and change localization | Current Biology 2011 | PNAS 2012 | PLOS ONE 2012 | PLOS Computational Biology 2013 | PDF | Journal of Vision 2017

Classic tasks with a twist: we always systematically vary the magnitude of change. Our main questions: what processes underlie the dependence of change detection behavior on set size; does uncertainty (reliability) get taken into account in change detection decisions? We have asked these questions both in humans and in non-human primates. Our main modeling framework combines limited resources, variable precision, and Bayesian inference. See also the data and code available here.

Visual attention

We have several projects on distributed attention and several on selective attention. Our distributed attention projects use visual search with simple, briefly presented stimuli. First, we examine the effects of the number of items (set size) on precision and performance, similar to working memory. Signal detection theories of visual search often do not contain resource limitations. We have shown that resource limitations are the rule rather than the exception. Second, we ask how close decision-making in visual search is to optimal. Third, we ask how distractor heterogeneity (diversity) affects search behavior. Signal detection theory studies of visual search have usually focused on homogeneous distractors, for computational tractability. However, homogeneous distractors are not very natural. We try to do better by studying heterogeneous distractors, still in a signal detection theory context. In the realm of selective attention, we have worked on the role of uncertainty in attention (probabilistic computation), detecting microsaccades, and attentional deficits in ADHD.

Current projects:

- How to optimally allocate attention? Nuwan de Silva

- How do task, memory demands, and stimulus spacing affect visual search with heterogeneous distractors? Andra Mihali

- How do various distractor statistics affect visual search with heterogeneous distractors? Joshua Calder-Travis

These papers compare optimal against heuristic decision rules in visual search.

Distributed attention: set size effects | Journal of Vision 2012 | Journal of Vision 2013

These papers study the effects of set size on visual search decisions.

Distributed attention: heterogeneous distractors | Nature Neuroscience 2011 | Journal of Vision 2012 | Journal of Vision 2013 | Neural Computation 2015 | PLoS ONE 2016

These papers use heterogeneous distractors in visual search.

Distributed attention: multiple-object tracking | Journal of Vision 2009

Tracking multiple objects at once requires dividing attention, but it also has an inference (decision-making) component.

Selective attention: the role of uncertainty | PNAS 2018

We asked whether the brain takes into account the level of uncertainty in setting decision criteria, when uncertainty is manipulated through attention.

Selective attention: ADHD | Computational Psychiatry 2018

We use a new experimental paradigm and a computational model to separately characterize perceptual and executive deficits.

Selective attention: detecting microsaccades | Journal of Vision 2017

Microsaccades have been used as a marker of selective attention. This paper develops a Bayesian method to detect microsaccades in noisy eye tracker data.

Neural coding and neural computation

Bayesian inference is a successful mathematical framework for describing how humans and other animals make perceptual decisions under uncertainty. This raises the question of how neural circuits implement, and learn to implement, Bayesian inference. Our lab has developed theories for such implementation; these theories establish experimentally testable correspondences between neural population activity and Bayesian behavior. We have proposed how Bayesian cue combination could be implemented using populations of cortical neurons; we call this form of coding probabilistic population codes (PPCs). Physiologists have since confirmed several predictions arising from this framework. We have generalized the theories to more complex computations, including decision-making, visual search, and categorization, often including detailed human behavioral experiments. We have shown that behaviorally relevant perceptual uncertainty can be decoded from fMRI activity. Most recently, we discovered that generic neural networks can easily learn to approximate Bayesian computation. Ongoing NIH-funded research in collaboration with the laboratory of Andreas Tolias strives to elucidate how neural populations encode uncertainty in primary visual cortex.

Current projects:

- How is uncertainty encoded and propagated in visual categorization? Edgar Walker and Andreas Tolias.

Foundational paper of probabilistic population codes, with an application to cue combination.

Decision-making | Neuron 2008

Tasks in which the observer accumulates evidence over time. We introduce an alternative to the drift-diffusion model.

Experimental tests of the likelihood component of probabilistic population codes | Nature Neuroscience 2015

In these papers, we show that behaviorally relevant likelihood functions (and associated uncertainty) can be decoded on a trial-by-trial basis from either the BOLD response in early visual cortex or from multi-unit activity in V1.

Physiological tests of Poisson-like variability | Journal of Neuroscience 2012

In this paper, we examine some of the basic predictions of Poisson-like PPCs in monkey primate visual cortex.

More complex probabilistic inference with PPCs | Nature Neuroscience 2011 (visual search) | PNAS 2013 (categorization) | Multisensory Research 2013 (causal inference)

These papers describe how more complex computations could be implemented using probabilistic population codes.

Neural population coding of multiple stimuli | Journal of Neuroscience 2015

We ask what happens if a single population has to encode multiple stimuli.

Probabilistic inference with generic neural networks | Nature Communications 2017

This paper uses a radically different approach from the papers above: instead of manually constructing networks, we train very simple neural networks, with comparable results. This paper reflects our current thinking on the neural implementation of Bayesian computation.

Neural mechanisms of working memory | Nature Neuroscience 2019

In neural networks, we examine what task properties and what intrinsic network properties determine whether the mechanisms of maintenance of working memory are more sequential (across the population) or more persistent (within single neurons).